Confused by all the new terms you encounter in the world of VR? Use our handy glossary to make sense of it all!

Early Access status

An app in Early Access isn’t finished yet, but you can still buy it and start playing with it. Why pay for something unfinished? That’s really up to you. Sometimes the price goes up when an app leaves Early Access, and as an early adopter you might have some influence over how it’s developed. If that doesn’t sound appealing, wait until it’s ready. More info.

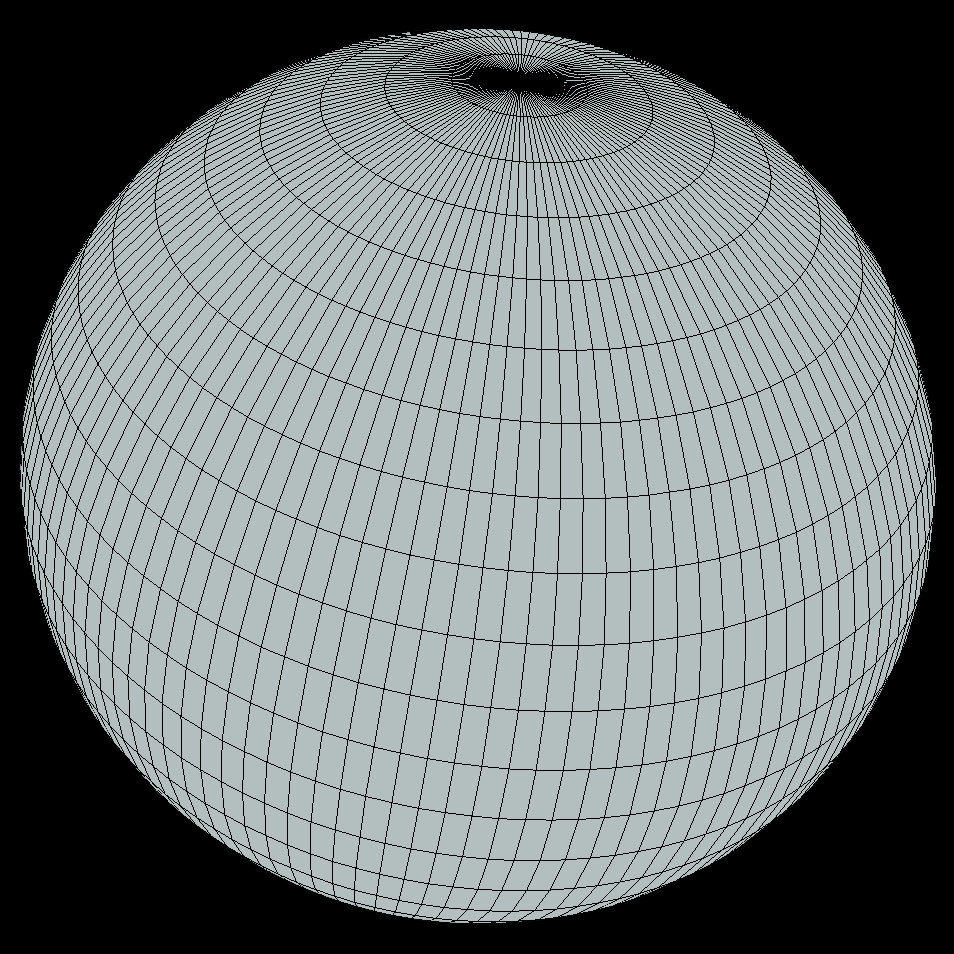

3D model

Also known as 3D object or mesh

3D/VR graphics are more than just placing objects next to each other. Every item can be made, modified, or found to fit the right look. 3D models are built from vertices and triangles. Each vertex is an x,y,z point in space, and triangles are the surfaces formed by those points. This creates something called a mesh, which can be thought of as a sculpture made out of chicken wire. It forms the shape but doesn’t look like much yet. The next step is to cover that mesh with color and texture, like applying papier-mâché. This creates the objects around the scene which really come to life as you add light sources and reflections.
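As a rough illustration of the vertex-and-triangle idea, here is a minimal Python sketch of a flat square built from four vertices and two triangles. The names (`vertices`, `triangles`, `mesh_area`) are made up for this example and don’t come from any particular 3D tool.

```python
# A unit square described as a mesh: 4 vertices, 2 triangles.
# Each vertex is an (x, y, z) point in space.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (1.0, 1.0, 0.0),
    (0.0, 1.0, 0.0),
]

# Each triangle lists the indices of the three vertices it connects.
triangles = [(0, 1, 2), (0, 2, 3)]


def triangle_area(a, b, c):
    """Area of one triangle: half the length of the cross product of two edges."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * (cx * cx + cy * cy + cz * cz) ** 0.5


def mesh_area(vertices, triangles):
    """Total surface area of the mesh (the sum of its triangle areas)."""
    return sum(
        triangle_area(vertices[i], vertices[j], vertices[k])
        for i, j, k in triangles
    )
```

Real models work the same way, just with thousands or millions of triangles instead of two.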

Locomotion

In virtual reality, you usually have a limited amount of space to physically walk around (your living room, for example). Within the app, you can usually either walk around physically, or move using your controllers. The most common options are smooth movement (gliding in a given direction) or teleportation (selecting a spot a certain distance away to jump to).
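The two controller-based options can be sketched in a few lines of Python. This is only an illustration: positions are simplified to (x, z) points on the floor, and the function names, the `speed` parameter, and the rule that clamps a teleport to `max_distance` are assumptions made for this example, not how any specific app works.

```python
import math


def smooth_move(position, direction, speed, dt):
    """Glide from `position` along `direction` for `dt` seconds at `speed` m/s."""
    length = math.hypot(direction[0], direction[1])
    if length == 0:
        return position  # no input from the controller, so stay put
    return (
        position[0] + direction[0] / length * speed * dt,
        position[1] + direction[1] / length * speed * dt,
    )


def teleport(position, target, max_distance):
    """Jump to `target`, or as far toward it as `max_distance` allows (assumed rule)."""
    dx = target[0] - position[0]
    dz = target[1] - position[1]
    distance = math.hypot(dx, dz)
    if distance <= max_distance:
        return target
    scale = max_distance / distance
    return (position[0] + dx * scale, position[1] + dz * scale)
```

Smooth movement is called every frame with a small `dt`, while teleportation changes the position once, in a single step.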

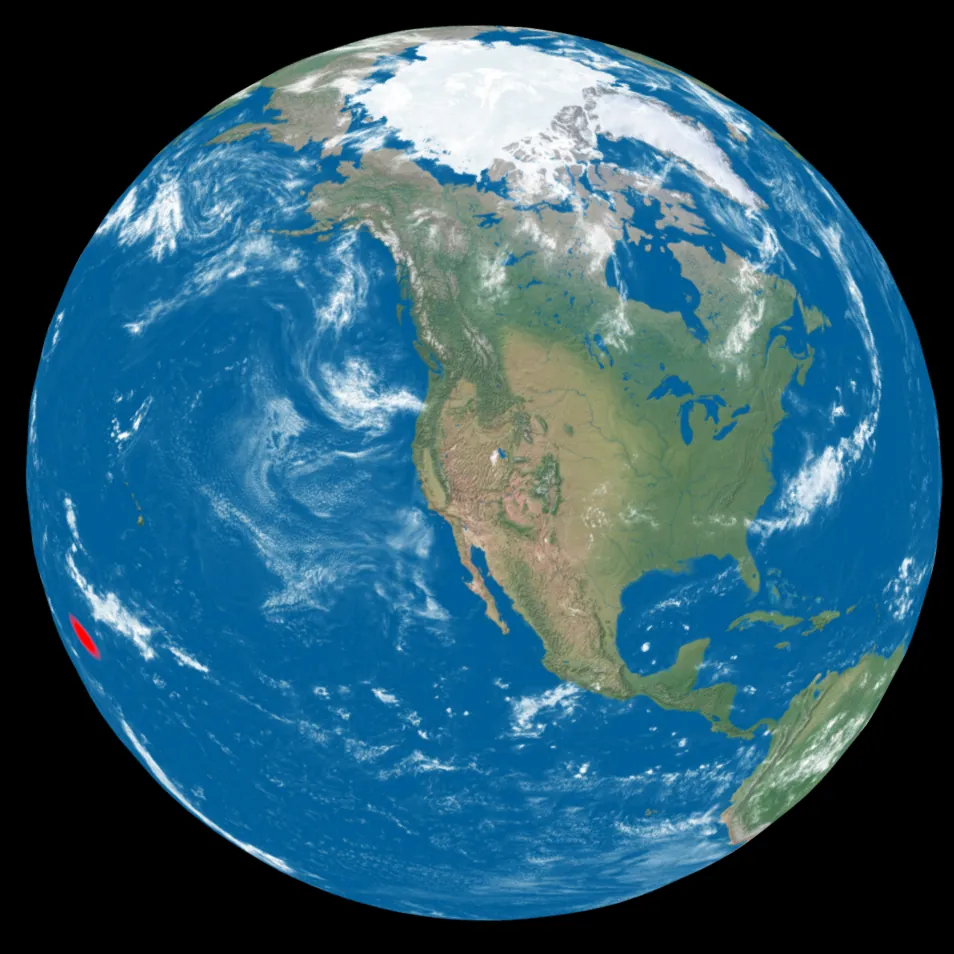

Photogrammetry

Also known as photo scanning

Capturing an environment using a very large number of photographs from every angle to create a 3D model. More details.

LiDAR

Technology used to create a 3D scan of an environment. It captures the depth of every point in the scene and is often used with photogrammetry. More details.

Eye tracking

Also known as gaze tracking

Additional sensors in some headsets that can detect whether the wearer’s eyes are open or closed and which direction they are looking. Useful for foveated rendering and for more natural interaction with your virtual environment.

Foveated rendering

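A technique that renders the scene at the highest quality in your direct field of view and at reduced quality in your peripheral vision. This lowers the rendering workload, and the savings can also be used to increase visual quality where you are actually looking.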
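To make the idea concrete, here is a minimal Python sketch that picks a render quality from the angle between the gaze direction and the direction of the thing being drawn. The 10° and 30° thresholds and the 1.0 / 0.5 / 0.25 quality levels are illustrative assumptions, not values used by any real headset.

```python
import math


def angle_between(a, b):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cosine))


def render_scale(gaze_dir, pixel_dir, inner_deg=10.0, outer_deg=30.0):
    """Return a quality factor: full near the gaze point, lower in the periphery."""
    angle = angle_between(gaze_dir, pixel_dir)
    if angle <= inner_deg:
        return 1.0   # direct field of view: full quality
    if angle <= outer_deg:
        return 0.5   # near periphery: half quality (assumed level)
    return 0.25      # far periphery: quarter quality (assumed level)
```

With eye tracking, `gaze_dir` follows the eyes; without it, a system can only assume you are looking straight ahead.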

Body tracking

Also known as skeletal tracking

Various methods for determining the location and orientation of your body. Traditionally, VR headsets provide information about your head along with tracking where the controllers are. This means that your chest, shoulders, arms, elbows, hips, knees, legs, and feet are unknown. It’s possible to approximate your positioning based on very few points. For example, if we only have the head, we can assume that the body is under it. If we know your height, we can determine if you are likely standing or sitting. Knowing hand positions from the controllers allows some idea of where your arms must be.

These assumptions are made using a process called inverse kinematics, which uses knowledge of the parts of your body, their connection to each other, and the constraints imposed by bones and muscles. It’s possible to buy tracking devices that attach to your body or that observe you using one or more cameras in the room, like motion capture for movies. With more data points, the approximation becomes more accurate, which can lead to better VR immersion.
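A tiny piece of inverse kinematics can be sketched in Python: given where the shoulder and hand are, plus the lengths of the upper arm and forearm, the law of cosines gives the bend at the elbow. This is a simplified two-bone example under assumed arm lengths; real body tracking also has to work out which way the elbow points and respect joint limits.

```python
import math


def elbow_angle(shoulder, hand, upper_arm, forearm):
    """Interior elbow angle in degrees (180 = arm fully straight).

    `shoulder` and `hand` are (x, y, z) positions in meters;
    `upper_arm` and `forearm` are bone lengths in meters (assumed known).
    """
    reach = math.dist(shoulder, hand)
    # The hand cannot be farther away than the arm is long, or closer
    # than the difference between the two bones, so clamp the distance.
    reach = max(abs(upper_arm - forearm), min(upper_arm + forearm, reach))
    # Law of cosines on the shoulder-elbow-hand triangle.
    cosine = (upper_arm ** 2 + forearm ** 2 - reach ** 2) / (2 * upper_arm * forearm)
    cosine = max(-1.0, min(1.0, cosine))
    return math.degrees(math.acos(cosine))
```

For example, with a 0.3 m upper arm and a 0.4 m forearm, a hand 0.5 m from the shoulder implies a right-angle bend at the elbow.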

Standalone headset

A headset that runs apps directly on the device, without needing a connection to a PC. Contrasted with PCVR. This includes headsets such as the Meta Quest series, Vive Focus/XR Elite/Flow, and the Apple Vision Pro. For a more complete list, check out vr-compare.com.

Inside-out tracking

This is the primary way that headsets track where we are. Sensors in the headset, and possibly the controllers, build up an image of the space around you and can tell when you move around. Even systems with base stations, such as the HTC Vive and the Valve Index, track from the headset (looking out). They just look for the base station beacons rather than the visuals of the space. Inside-out tracking is contrasted with external tracking, where cameras/sensors look inward toward the player, similar to how motion capture for games and movies is done.

Volumetric capture

Typically refers to a method of recording video sequences in 3D. As mentioned under photogrammetry, capturing items and scenes in the real world requires a huge number of images, which are then analyzed and processed to create a 3D model. When working with things that move, like the performance of a person or animal, not only must this process be repeated for every frame, but the changes to the resulting models must also be encoded into a format optimized for playback. Because of all the challenges in this process, it’s worth noting when it’s done. The alternative is to overlay flat video sequences into a 3D scene and hope the player doesn’t look too closely (also known as billboarding).

Point cloud

A representation of a scene consisting of sparse points in space, each representing a spot on a line or surface. These typically originate from a laser depth scanner shooting a beam toward a scene and calculating the time it takes to bounce back. The scanner shoots out beams in every direction from a given spot and is then moved to different spots to record the scene.
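The time-of-flight calculation can be sketched in Python. The beam travels to the surface and back, so the distance is half the round-trip time multiplied by the speed of light. The angle convention used here (azimuth around a vertical z axis, elevation above the horizon) and the function names are assumptions for this example; real scanners define their own conventions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_to_distance(round_trip_seconds):
    """Distance to the surface: the beam covers it twice (out and back)."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def beam_to_point(origin, azimuth_deg, elevation_deg, round_trip_seconds):
    """Turn one beam measurement into an (x, y, z) point of the cloud."""
    distance = tof_to_distance(round_trip_seconds)
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    return (
        origin[0] + distance * math.cos(elevation) * math.cos(azimuth),
        origin[1] + distance * math.cos(elevation) * math.sin(azimuth),
        origin[2] + distance * math.sin(elevation),
    )
```

Repeating this for every beam direction, and again from each scanner position (`origin`), builds up the full point cloud.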

VR180

A media format based on using two 180° fisheye lenses spaced similarly to the distance between human eyes (about 65 mm). The dual lenses create a stereo 3D view, and the fisheye results in a distorted image that captures the up/down/left/right peripheral views. Cameras typically support taking both still images and videos. When viewed/played in the right software, the image is reprojected (undistorted) so you can look around naturally (just not look behind). Cameras that can capture VR180 include the Calf VR180, Calf x Visinse, Canon cameras (with a special lens), and others.
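Reprojection boils down to answering one question: for a given viewing direction, which spot in the fisheye image should be shown? Here is a minimal Python sketch of that lookup. It assumes an ideal "equidistant" fisheye, where distance from the image center grows in proportion to the angle from the lens axis; real lenses deviate from this and need calibration, so treat it as an illustration only.

```python
import math


def direction_to_fisheye(direction, fov_deg=180.0):
    """Map a 3D viewing direction to normalized fisheye image coordinates.

    The camera looks along +z, with x to the right and y up (assumed axes).
    Returns (u, v) in the range -1..1, where (0, 0) is the image center and
    the image circle has radius 1, or None if the direction is outside the
    lens's field of view (for example, behind the camera).
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    # Angle between the viewing direction and the lens axis.
    theta = math.acos(max(-1.0, min(1.0, z / length)))
    half_fov = math.radians(fov_deg) / 2.0
    if theta > half_fov + 1e-9:
        return None
    radius = theta / half_fov       # equidistant model: radius is linear in angle
    phi = math.atan2(y, x)          # direction around the lens axis
    return (radius * math.cos(phi), radius * math.sin(phi))
```

A player runs a lookup like this for each eye’s image separately, which is what preserves the stereo 3D effect as you look around.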